# Helper packages

library(dplyr) # for data wrangling

library(ggplot2) # for awesome plotting

library(modeldata)

library(foreach) # for parallel processing with for loops

# Modeling packages

# library(tidymodels)

library(xgboost)

library(gbm)

Ensembles Lab 2: Boosting

Introduction

This lab continues from the previous one and shows how to apply boosting. The same dataset is used.

Ames Housing dataset

The AmesHousing package contains the data together with instructions for creating the required dataset.

Here, however, we use the version from the modeldata package, in which some preprocessing has already been performed (see: https://www.tmwr.org/ames).

The dataset has 74 variables, so a descriptive analysis is not provided.

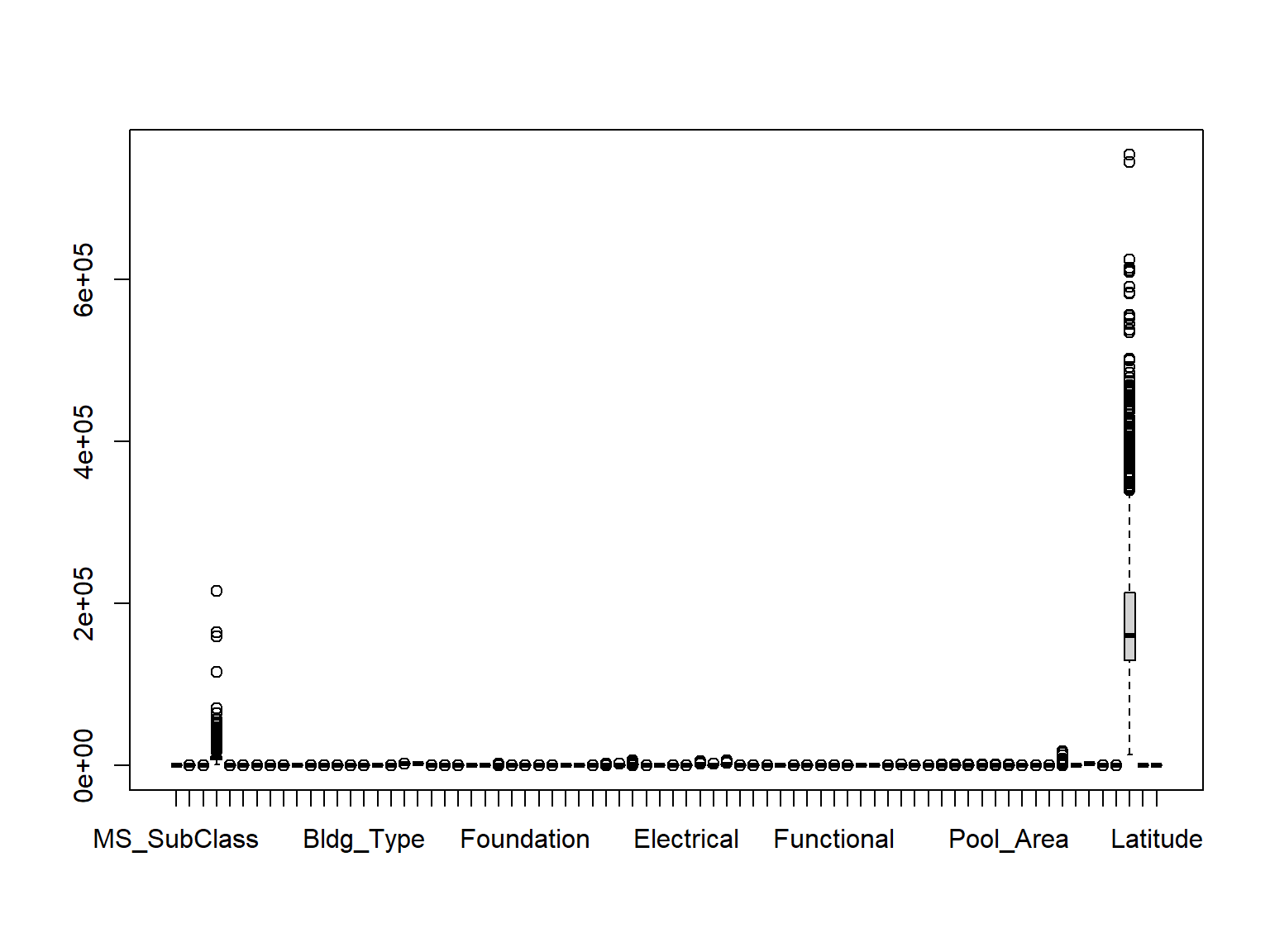

dim(ames)

[1] 2930   74

boxplot(ames)

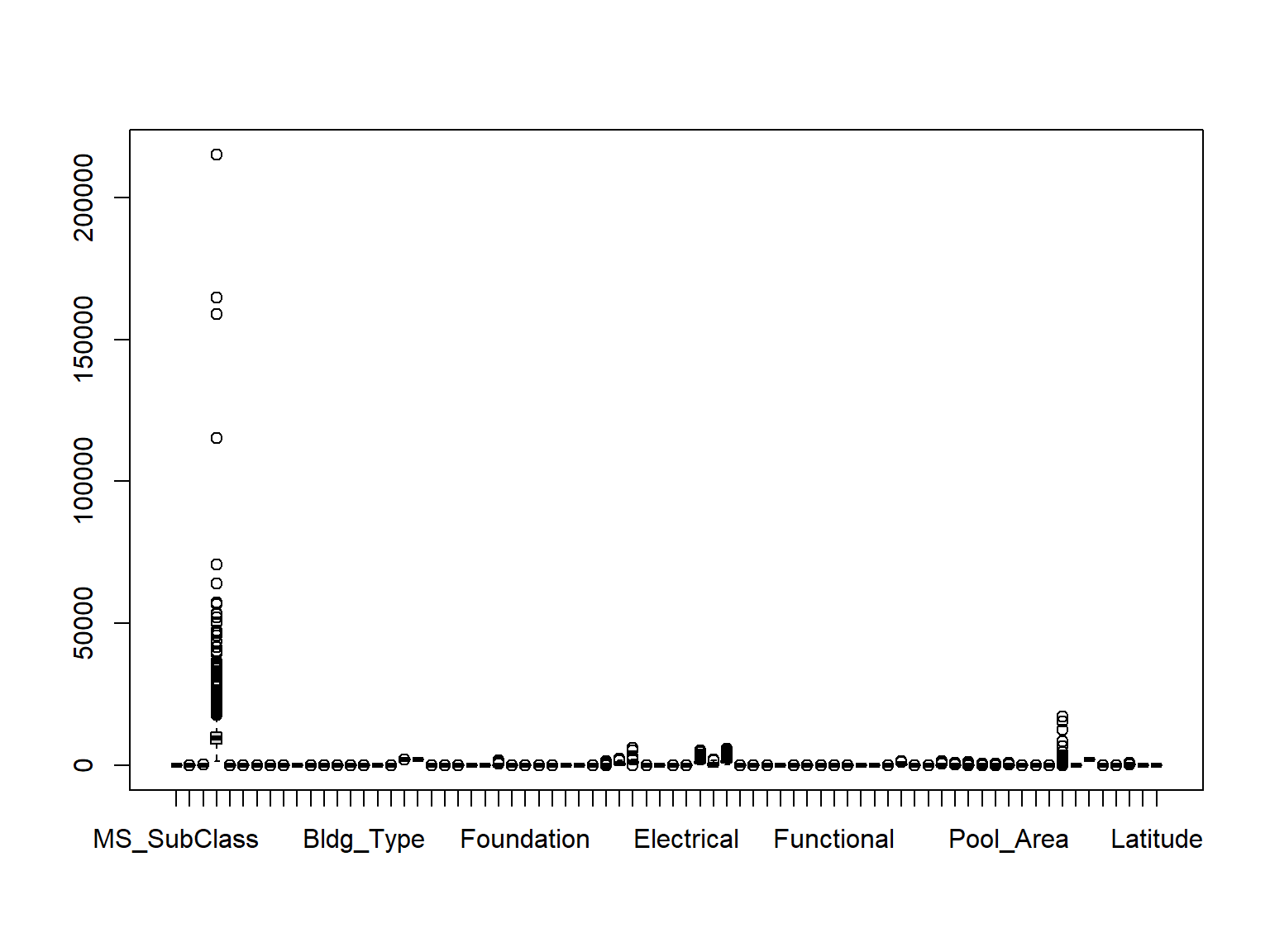

As in the previous lab, we divide the response variable by 1000 to make the results easier to review.

require(dplyr)

ames <- ames %>% mutate(Sale_Price = Sale_Price/1000)

boxplot(ames)

Splitting the data into test/train

The data are split into separate training and test sets, with sampling stratified on the response variable, Sale_Price, so that its distribution is similar in both sets.
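As a rough illustration of what stratifying on a numeric response means, the following base-R sketch bins a synthetic, right-skewed price variable into quartile groups and samples 70% within each group. The variable `price` and the quartile binning are assumptions made for illustration; this is not rsample's internal code, only the general idea behind it.

```r
# Base-R sketch of a stratified 70/30 split on a numeric response.
# `price` is synthetic; it only stands in for a skewed response such as Sale_Price.
set.seed(123)
price <- rlnorm(1000, meanlog = 5, sdlog = 0.4)

# 1. Bin the response into quartile groups (labels 1 to 4).
bins <- cut(price,
            breaks = quantile(price, probs = seq(0, 1, 0.25)),
            include.lowest = TRUE, labels = FALSE)

# 2. Within each bin, draw 70% of the row indices for training.
train_idx <- unlist(lapply(split(seq_along(price), bins), function(idx) {
  idx[sample.int(length(idx), size = round(0.7 * length(idx)))]
}))
test_idx <- setdiff(seq_along(price), train_idx)

# 3. Compare how the bins are represented in each set.
prop.table(table(bins[train_idx]))
prop.table(table(bins[test_idx]))
```

Because every bin contributes the same fraction of rows, cheap and expensive houses appear in both sets in similar proportions, which a purely random split does not guarantee for a skewed response.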

# Stratified sampling with the rsample package

set.seed(123)

split <- rsample::initial_split(ames, prop = 0.7,

strata = "Sale_Price")

ames_train <- rsample::training(split)

ames_test <- rsample::testing(split)

Fitting a boosted regression tree with xgboost

XGBoost Parameters Overview

The xgboost() function in the XGBoost package trains Gradient Boosting models for regression and classification tasks. Key parameters and hyperparameters include:

- Parameters:
  - params: List of training parameters.
  - data: Training data.
  - nrounds: Number of boosting rounds.
  - watchlist: Validation set for early stopping.
  - obj: Custom objective function.
  - feval: Custom evaluation function.
  - verbose: Verbosity level.
  - print_every_n: Print frequency.
  - early_stopping_rounds: Rounds for early stopping.
  - maximize: Maximize evaluation metric.
  - save_period: Model save frequency.
  - save_name: Name for saved model.
  - xgb_model: Existing XGBoost model.
  - callbacks: List of callback functions.

XGBoost Parameters Overview

Numerous parameters govern XGBoost’s behavior. A detailed description of all parameters can be found in the XGBoost documentation. Key considerations include those controlling tree growth, model learning rate, and early stopping to prevent overfitting:

- Parameters:
  - booster [default = gbtree]: Type of weak learner, trees (“gbtree”, “dart”) or linear models (“gblinear”).
  - eta [default = 0.3, alias: learning_rate]: Reduces each tree’s contribution by multiplying its original influence by this value.
  - gamma [default = 0, alias: min_split_loss]: Minimum loss reduction required for a split to occur.
  - max_depth [default = 6]: Maximum depth trees can reach.
  - subsample [default = 1]: Proportion of observations used for each tree’s training. If less than 1, applies Stochastic Gradient Boosting.
  - colsample_bytree [default = 1]: Proportion of predictors sampled when building each tree.
  - nrounds: Number of boosting iterations, i.e., the number of models in the ensemble.
  - early_stopping_rounds: Number of consecutive iterations without improvement to trigger early stopping. If NULL, early stopping is disabled. Requires a separate validation set (watchlist) for early stopping.
  - watchlist: Validation set used for early stopping.
  - seed: Seed for result reproducibility. Note: use set.seed() instead.
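To make the roles of eta, nrounds and early_stopping_rounds more concrete, here is a minimal base-R sketch of gradient boosting for squared error using depth-1 trees (stumps) on synthetic data. The functions `fit_stump`, `predict_stump` and `boost` are hypothetical helpers written for this illustration; they are not part of xgboost and omit regularization, subsampling and everything else that makes XGBoost efficient.

```r
# Minimal gradient boosting with stumps (base R only, illustrative names).

# Fit a stump to residuals r: pick the threshold on x with the smallest SSE.
fit_stump <- function(x, r) {
  xs <- sort(unique(x))
  thresholds <- (head(xs, -1) + tail(xs, -1)) / 2  # midpoints between neighbours
  best <- list(sse = Inf)
  for (thr in thresholds) {
    left <- r[x <= thr]
    right <- r[x > thr]
    sse <- sum((left - mean(left))^2) + sum((right - mean(right))^2)
    if (sse < best$sse) {
      best <- list(sse = sse, thr = thr, left = mean(left), right = mean(right))
    }
  }
  best
}

predict_stump <- function(stump, x) ifelse(x <= stump$thr, stump$left, stump$right)

boost <- function(x, y, x_val, y_val, eta = 0.3, nrounds = 200,
                  early_stopping_rounds = NULL) {
  pred <- rep(mean(y), length(y))          # start from the mean response
  pred_val <- rep(mean(y), length(y_val))
  val_rmse <- numeric(0)
  best_rmse <- Inf
  best_iter <- 0
  for (i in seq_len(nrounds)) {
    stump <- fit_stump(x, y - pred)        # residuals = negative gradient
    pred <- pred + eta * predict_stump(stump, x)             # shrunken update
    pred_val <- pred_val + eta * predict_stump(stump, x_val)
    val_rmse[i] <- sqrt(mean((y_val - pred_val)^2))
    if (val_rmse[i] < best_rmse) {
      best_rmse <- val_rmse[i]
      best_iter <- i
    }
    # Stop once the validation error has not improved for the given number of rounds.
    if (!is.null(early_stopping_rounds) && i - best_iter >= early_stopping_rounds) break
  }
  list(val_rmse = val_rmse, best_iter = best_iter, best_rmse = best_rmse)
}

set.seed(1)
x <- runif(200)
y <- sin(2 * pi * x) + rnorm(200, sd = 0.3)
x_val <- runif(100)
y_val <- sin(2 * pi * x_val) + rnorm(100, sd = 0.3)

slow <- boost(x, y, x_val, y_val, eta = 0.05, nrounds = 300)
stopped <- boost(x, y, x_val, y_val, eta = 0.5, nrounds = 300,
                 early_stopping_rounds = 10)
c(rounds_run = length(stopped$val_rmse), best_iter = stopped$best_iter)
```

A small eta needs more rounds to reach a given error but usually yields a smoother validation curve, while a large eta converges quickly and benefits more from early stopping. This trade-off is why eta and nrounds are normally tuned together.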

Test / Training in XGBoost

XGBoost Data Formats

XGBoost models can work with various data formats, including R matrices.

However, it’s advisable to use xgb.DMatrix, a specialized and optimized data structure within this library.
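xgb.DMatrix needs numeric input, so a data frame containing factor columns has to be converted first. The small sketch below, on a made-up data frame `df`, shows what data.matrix() does with factors and, for comparison, how model.matrix() produces one indicator column per level. The column names and values are invented for illustration.

```r
# Toy data frame with one numeric and one factor column (hypothetical values).
df <- data.frame(
  area = c(850, 1200, 990),
  zone = factor(c("RL", "RM", "RL"))
)

# data.matrix() replaces each factor by its integer level code (RL = 1, RM = 2).
m <- data.matrix(df)
m

# model.matrix() is an alternative that creates one 0/1 column per factor level.
onehot <- model.matrix(~ zone - 1, data = df)
onehot
```

Integer codes impose an arbitrary ordering on the levels. Tree-based learners can often work around this through repeated splits, but one-hot encoding is worth considering when a factor has many unordered levels.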

ames_train <- xgb.DMatrix(

data = ames_train %>% select(-Sale_Price) %>% data.matrix(),

label = ames_train$Sale_Price

)

ames_test <- xgb.DMatrix(

data = ames_test %>% select(-Sale_Price)

%>% data.matrix(),

label = ames_test$Sale_Price

)

Fit the model

set.seed(123)

ames.boost <- xgb.train(

data = ames_train,

params = list(max_depth = 2),

nrounds = 1000,

eta= 0.05

)

ames.boost

##### xgb.Booster

raw: 872.5 Kb

call:

xgb.train(params = list(max_depth = 2), data = ames_train, nrounds = 1000,

eta = 0.05)

params (as set within xgb.train):

max_depth = "2", eta = "0.05", validate_parameters = "1"

xgb.attributes:

niter

callbacks:

cb.print.evaluation(period = print_every_n)

# of features: 73

niter: 1000

nfeatures : 73

Prediction and model assessment

ames.boost.trainpred <- predict(ames.boost,

newdata = ames_train

)

ames.boost.pred <- predict(ames.boost,

newdata = ames_test

)

train_rmseboost <- sqrt(mean((ames.boost.trainpred - getinfo(ames_train, "label"))^2))

test_rmseboost <- sqrt(mean((ames.boost.pred - getinfo(ames_test, "label"))^2))

paste("Error train (rmse) in XGBoost:", round(train_rmseboost,2))

[1] "Error train (rmse) in XGBoost: 14.31"

paste("Error test (rmse) in XGBoost:", round(test_rmseboost,2))

[1] "Error test (rmse) in XGBoost: 22.69"

Parameter optimization

The extra lab “labs/Lab-C.2.4b-BoostingOptimization.qmd” shows how to optimize some important boosting parameters.